Student: Jônatas de Souza Nascimento

Advisor Professor: Prof. Dr. Artur Jordão

Convolutional Neural Networks (CNNs) are renowned for their proficiency in computer vision tasks, particularly in image classification. However, advancements in CNN models coupled with the demand for higher image resolutions have led to escalated computational costs, thereby impacting their portability and the requisite computational resources. This study investigates the influence of image resolution on CNN performance, seeking an equilibrium between accuracy and the associated memory and processing demands. The ultimate aim is to reduce the number of network parameters, thus optimizing CNNs for use in devices with limited resources, such as mobile phones.

Research Questions

1. Is it possible to reduce the resolution of a trained Neural Network model and keep its predictive capacity?

2. Is it possible to systematically find a resolution at which the model keeps its predictive ability?

Answering these questions enables us to reduce the computational cost of CNN-based solutions simply by reducing the resolution of pre-trained models.

We use two well-known benchmarks, CIFAR-10 and ImageNet, and three popular CNN architectures: NasNet, ResNet, and MobileNet.

Main Experiments

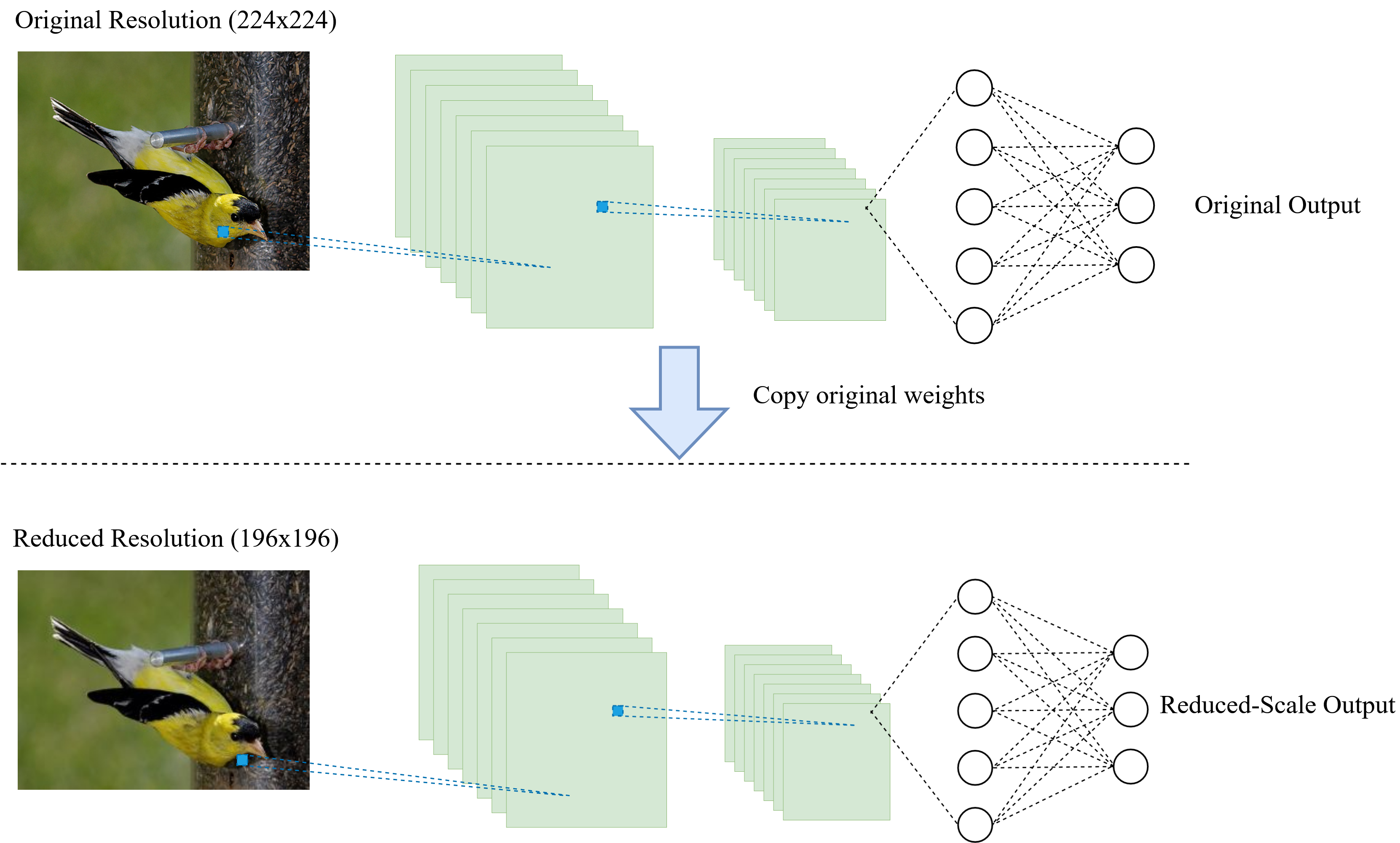

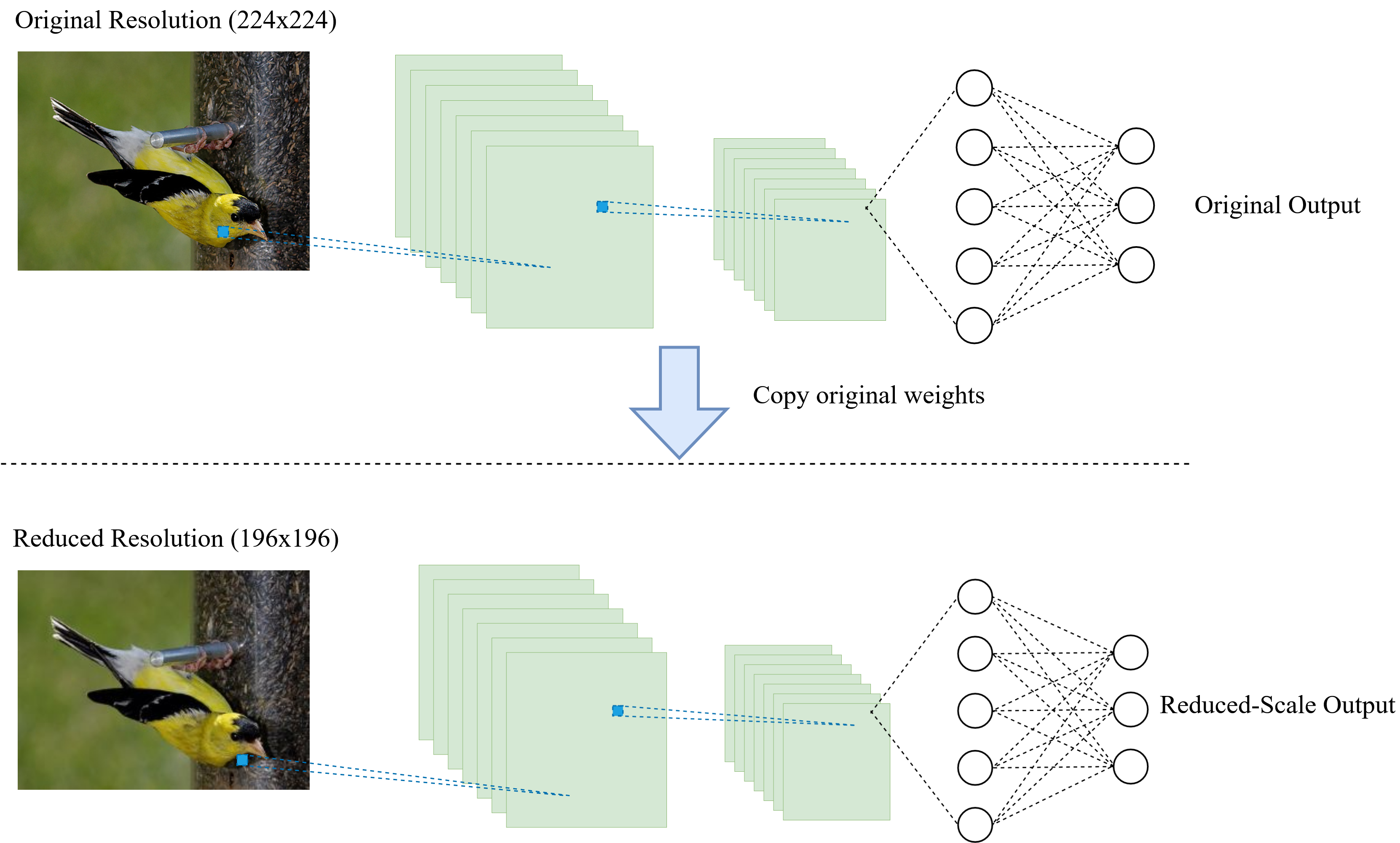

1. Reduce the resolution of pre-trained CNNs and evaluate the floating-point operations (FLOPs) and accuracy compared to the original model.

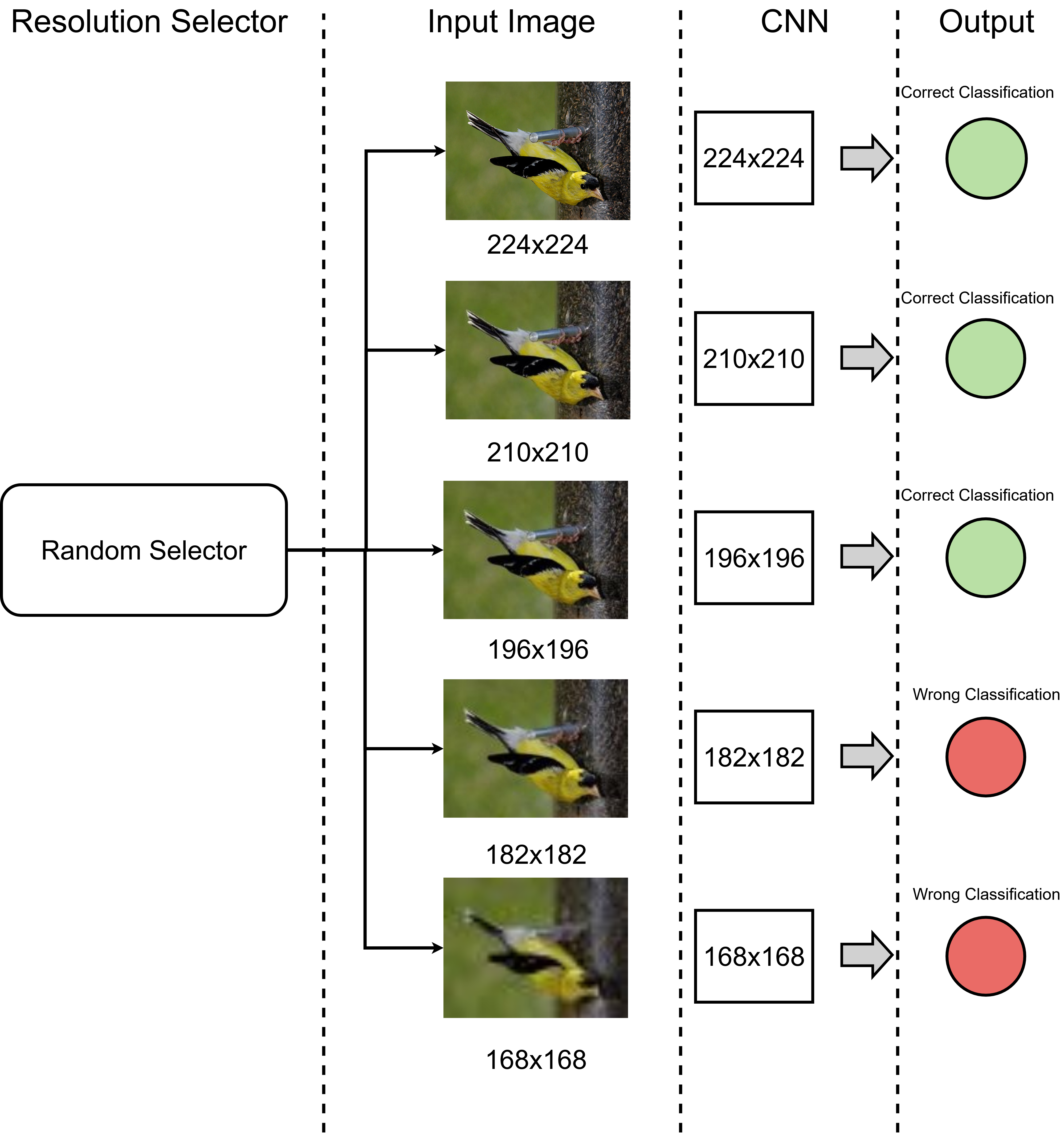

2. Select the resolution randomly from a set of pre-defined resolutions and evaluate the FLOPs and accuracy compared to the original model.
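The second experiment can be illustrated with a minimal sketch. The candidate set `CANDIDATE_RESOLUTIONS`, the uniform sampling, the per-image selection, and the nearest-neighbor resize are all assumptions made for illustration; the source does not state which resolutions, sampling scheme, or interpolation method were used.

```python
import random

# Hypothetical candidate set: the source does not list the actual resolutions.
CANDIDATE_RESOLUTIONS = [32, 28, 24, 20]


def resize_nearest(image, size):
    """Nearest-neighbor resize of a square image (a list of rows) to size x size."""
    src = len(image)
    return [
        [image[(r * src) // size][(c * src) // size] for c in range(size)]
        for r in range(size)
    ]


def random_resolution_batch(images, resolutions, rng):
    """Resize each image to a resolution drawn uniformly at random (assumed scheme)."""
    batch = []
    for img in images:
        size = rng.choice(resolutions)
        batch.append((size, resize_nearest(img, size)))
    return batch


rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Four synthetic 32 x 32 single-channel images standing in for real inputs.
images = [[[r * 32 + c for c in range(32)] for r in range(32)] for _ in range(4)]
batch = random_resolution_batch(images, CANDIDATE_RESOLUTIONS, rng)
```

Each entry of `batch` pairs the chosen resolution with the resized image, which would then be fed to the pre-trained model to measure FLOPs and accuracy.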

Resolution Reduction Diagram

Random Resolution Selection Diagram

NasNet on CIFAR-10

| Resolution | FLOPs Drop (%) | Accuracy Drop (%) |
|---|---|---|
| 28 x 28 | 23.43 | 1.92 |
| 24 x 24 | 43.74 | 4.34 |
| 20 x 20 | 60.93 | 13.32 |
| 16 x 16 | 74.99 | 29.58 |
| 12 x 12 | 85.93 | 52.40 |
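The FLOPs column above is close to what a simple area-scaling rule predicts. The sketch below assumes that convolutional cost grows with the number of input pixels, so the drop is roughly 1 - (r / R)^2 for a reduced side length r and a base side length R, and it assumes R = 32, the native CIFAR-10 resolution. The function name `flops_drop_percent` is illustrative, and this is an approximation, not the measurement procedure used in the study.

```python
def flops_drop_percent(reduced, base=32):
    """Approximate FLOPs drop (%) when the input side shrinks from base to reduced.

    Assumes cost is proportional to the number of input pixels (side squared).
    """
    return 100.0 * (1.0 - (reduced / base) ** 2)


# Compare the approximation against the resolutions listed in the table.
for side in (28, 24, 20, 16, 12):
    print(f"{side} x {side}: {flops_drop_percent(side):.2f}%")
```

Under these assumptions the estimates agree with the reported NasNet values to within about 0.01 percentage points. The rule has only been checked against this table and may not hold for other architectures or datasets.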

ResNet on ImageNet: Comparison with existing works

| Method | FLOPs Drop (%) | Accuracy Drop (%) |
|---|---|---|
| (HE, Y.; ZHANG, X.; SUN, J., 2017) | 20.00 | 1.70 |
| (WANG et al., 2018) | 20.00 | 2.00 |
| (HE et al., 2018) | 20.00 | 1.40 |
| Ours (210 x 210) | 3.90 | 1.22 |
| Ours (196 x 196) | 14.72 | 2.90 |
| Ours (182 x 182) | 29.26 | 2.70 |
| Ours (168 x 168) | 38.49 | 5.13 |
| Ours (154 x 154) | 49.50 | 6.57 |
| Ours (140 x 140) | 57.26 | 10.72 |
| Ours (Random Resolution) | 17.30 | 2.42 |
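One simple way to read the fixed-resolution rows is to fix an accuracy budget and pick the resolution with the largest FLOPs drop that stays within it. The sketch below does this with the ResNet values reported above. The helper `best_within_budget` and the 3.0% budget are hypothetical choices for illustration, not the selection method used in the study.

```python
# (side length, FLOPs drop %, accuracy drop %) copied from the ResNet on ImageNet table.
RESULTS = [
    (210, 3.90, 1.22),
    (196, 14.72, 2.90),
    (182, 29.26, 2.70),
    (168, 38.49, 5.13),
    (154, 49.50, 6.57),
    (140, 57.26, 10.72),
]


def best_within_budget(results, max_accuracy_drop):
    """Return the row with the largest FLOPs drop whose accuracy drop fits the budget."""
    feasible = [row for row in results if row[2] <= max_accuracy_drop]
    if not feasible:
        return None  # no resolution satisfies the budget
    return max(feasible, key=lambda row: row[1])


# Hypothetical budget: tolerate at most a 3.0% accuracy drop.
choice = best_within_budget(RESULTS, 3.0)
print(choice)
```

With a 3.0% budget this selects 182 x 182, which has a larger FLOPs drop and a smaller accuracy drop than 196 x 196. That shows the accuracy drop is not strictly monotonic in resolution, so a selection rule has to compare all candidates rather than stop at the first one that exceeds the budget.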

• The experiments performed on the ImageNet dataset suggest that it is possible to reduce the CNN resolution with only a minor reduction in its predictive capability.

• These experiments achieved significant reductions in FLOPs with a minor drop in accuracy: for example, ResNet at 182 x 182 reduces FLOPs by 29.26% with a 2.70% drop in accuracy.

• Random selection of model resolutions reduced FLOPs by 17.30% with a 2.42% accuracy drop, which is comparable to other methods in the literature (20.00% FLOPs reduction with accuracy drops of 1.40% to 2.00%).

• There is potential for developing a more refined method for selecting the scale of Neural Networks.

[1] HE, Y.; ZHANG, X.; SUN, J. Channel pruning for accelerating very deep neural networks. In: Proceedings of the IEEE International Conference on Computer Vision. [S.l.: s.n.], 2017.

[2] WANG, X. et al. SkipNet: Learning dynamic routing in convolutional networks. In: Proceedings of the European Conference on Computer Vision. [S.l.: s.n.], 2018.

[3] HE, Y. et al. Filter pruning via geometric median for deep convolutional neural networks acceleration. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. [S.l.: s.n.], 2019.